1. Introduction

At SVAIL (Silicon Valley AI Lab), our mission is to create AI technology that lets us have a significant impact on hundreds of millions of people. We believe that a good way to do this is to improve the accuracy of speech recognition by scaling up deep learning algorithms on larger datasets than what has been done in the past. These algorithms are very compute intensive, so much so that the memory capacity and computational throughput of our systems limits the amount of data and the size of the neural network that we can train. So a big challenge is figuring out how to run deep learning algorithms more efficiently. Doing so would allow us to train bigger models on bigger datasets, which so far has translated into better speech recognition accuracy. Here we want to discuss a new technique for speeding up the training of deep recurrent neural networks.

2. Mapping Recurrent Layers To Hardware

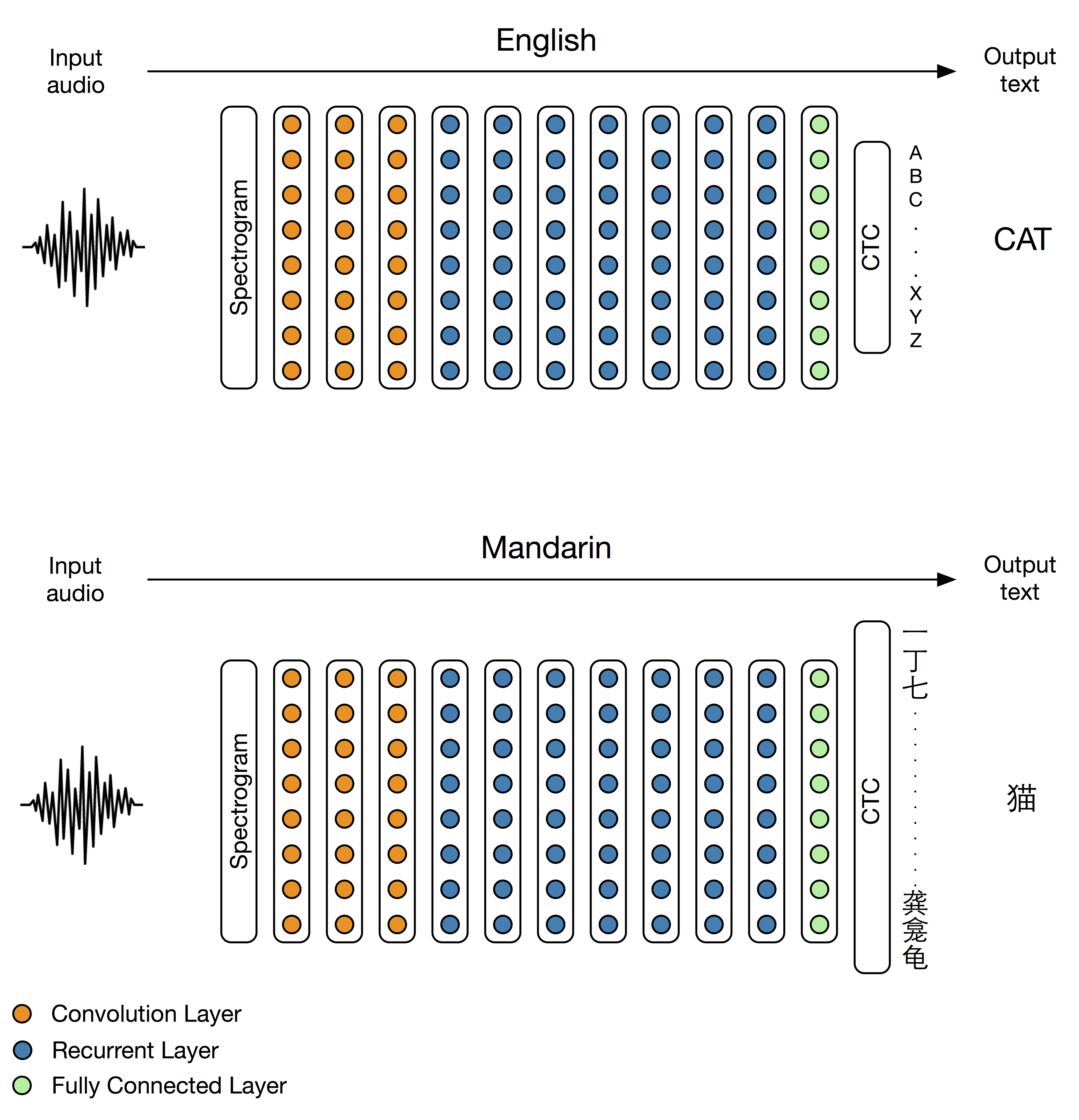

The most computationally intensive part of both of our speech recognition models are the recurrent layers (shown in blue in the figure above), so this optimization is directed at this part of the network.

2.1. Implementing RNNs Using Matrix Multiplications

A natural way to implement a recurrent neural network is with a series of matrix multiplication operations as described in our previous blog post and shown in the figure above. This involves loading the recurrent weight matrix U and the activation vector H for each timestep from off-chip memory.

In high performance processors such as GPUs, off-chip memory is much slower and much less efficient than on-chip memory such as register files or caches. So matrix multiplications are most efficient when the mini-batch size is relatively large (about 64 or higher per GPU) because the recurrent weights can be loaded from off-chip memory once, and reused over each sample in the mini-batch.

However, using a large mini-batch has several downsides:

- It increases the amount of memory needed to train a network.

- It uses up available data parallelism within a single GPU.

- It complicates the deployment of models.

It should be clear that using a large mini-batch per GPU requires more memory. When training RNNs over many timesteps, there is actually much more memory required to store the activations than the network weights. For example, storing the weights for a simple RNN with 1200 units in 32-bit floating point requires about 5.7 MB of memory, but storing the activations for a mini-batch size of 64 and 700 timesteps requires about 215 MB of memory. So an increase in the mini-batch size directly translates into a proportional increase in the memory required to train a model.

It should also be clear that a large mini-batch per GPU uses up available data parallelism that could have been used to spread the computation over multiple GPUs. Using an algorithmic mini-batch size of 512 allows the use of 128 GPUs at a mini-batch-per-GPU size of 4, but only 16 GPUs at a mini-batch-per-GPU size of 64. Training a single model with 128 GPUs running at close to peak efficiency may seem extreme to many readers, but it is important to us because it allows us to test the hypothesis that speech recognition accuracy continues to improve with network size and data capacity.

Finally, requiring a large batch size per GPU the complicates deployment of RNNs by requiring that multiple user streams are scheduled to be processed simulatenously on the same GPU. This is extremely difficult in embedded applications (e.g. speech recognition running on a cell phone) because there will typically only be a single user. It is also somewhat difficult for cloud based deployments because multiple user streams must be scheduled concurrently on a single server to obtain good energy efficiency, but not too many streams such that latency is increased.

2.2. Using Persistent Kernels

So we would like to find a way to load recurrent weights once and reuse them multiple times without increasing the mini-batch size.

This would allow us to:

- Train bigger or deeper networks with the same hardware.

- Scale network training to more GPUs.

- Make the deployment of models with few simultaneous users more efficient.

For a GPU, the largest source of on-chip memory is distributed among the individual register files of thousands of threads. For example, the NVIDIA TitanX GPU has 6.3 MB of register file memory, which is enough to store a recurrent layer with approximately 1200 activations. Persistent kernels exploit this register file memory to cache recurrent weights and reuse them over multiple timesteps.

However, if individual threads are each working on a subset of the network weights, then they must communicate to aggregate partial results for the current timestep together as well as to read the updated activations for the next timestep generated by other threads. This means that thousands of GPU threads need to communicate and synchronize with each other between each timestep. This type of synchronization is not supported by CUDA or OpenCL because GPUs do not guarantee that more threads than a single thread block will run concurrently. However, they commonly do run many threads concurrently, especially on larger GPUs like the TitanX. We can work around this limitation by implementing a form of preemptive multitasking on the GPU, where threads attempt to synchronize directly using a global barrier, but eventually time out and exit. A runtime system on the CPU monitors threads that have exited early and restarts the kernel (reloading the weights from memory) until all of them succeed. In practice the global barrier almost never times out if this is the only program using the GPU and we are careful to not launch too many threads.

This approach significantly improves performance at small batch sizes compared to implementations based on matrix multiplications. Performance at a batch size of 4 goes from about 90 GFLOPs to 2.8 TFLOPs, approximately a 30x speedup.

2.3. Possible Alternative Approaches

For a given performance problem, there are often multiple possible solutions. This section describes techniques other than Persistent RNNs that could be used to reduce the amount of memory required to train RNNs and to reduce the mini-batch size per GPU. We have found that they were less effective than Persistent RNNs for our speech recognition models. However, these techniques may be beneficial in other situations.

- Truncated back propagation through time

- Use of CPU memory to cache activations

- RNN model parallelism

2.3.1. Trancated back propagation through time

Truncated back propagation through time reduces the memory required to store activations during back propagation by performing forward and backward propagation over a fixed number of timesteps before moving on to the rest of an utterance. This approach can significantly reduce the amount of memory required to train a network because only the activations for a fixed number of timesteps need to be saved, but this comes at the cost of losing gradient information for long range dependencies through time. For our systems, we found that it results in about a 20% relative loss in speech recognition accuracy compared to performing back propagation on an entire utterance. So we choose to not use truncated back propgation through time for our speech recognition models.

2.3.2. Caching activations in CPU memory

Another way to circumvent the GPU memory limit is to cache the activation data for back propagation in CPU off-chip memory, which is typically much larger than GPU off-chip memory. This is a specific case of a more general strategy of storing activations for back propagation in higher levels of the system memory hierarchy (e.g. CPU DRAM, SSD Caches, Disks, etc). This approach can be effective if the time needed to copy the data back and forth from CPU memory is lower than the time needed to perform the arithmetic operations required by forward and backward propagation through the network. However, in our case, our network evaluation on the 8 GPUs in a single node is so fast that streaming the activation data back and forth from CPU DRAM would result in a 2-4x slowdown of the entire system. Additionally, it would steal processor interconnect bandwidth from the all-reduce operation that we use to implement data-parallelism across nodes. So it doesn't make sense to do this for our system. In general though, this might be a good idea in a system with fewer GPUs per CPU, or a system with a slower RNN implementation.

2.3.3. RNN model parallelism

A final approach to reduce the memory required per GPU is to use model-parallelism to partition individual recurrent layers among multiple GPUs. This approach still expresses the RNN forward and backward propagation operations in terms of matrix multiplications, but performs the individual matrix multiplications in a distributed fashion over multiple GPUs. A relatively large mini-batch size is still needed to make the matrix multiplication operations efficient, but it is divided among multiple GPUs so the effective mini-batch per GPU is reduced. This approach requires expensive inter-GPU synchronizations between each timestep to combine the results of the distributed mutliplication together, so it makes sense only when the recurrent layer is very large, for example, 5000 activations per layer on 4 TitanX GPUs. This approach is complementary to using Persistent RNNs, with Persistent RNNs being more efficient for medium sized layers, and Model Parallel RNNs being more efficient for very large layers.

3. Conclusions

This work demonstrates that RNN weights can be efficiently cached in GPU registers, and that high computational throughput can be attained with this approach. This substaintially improves performance at low mini-batch sizes, enabling training deeper models on the same hardware, and scaling to more GPUs.

3.1. Training deeper models

Reducing the mini-batch size from 64 to 4 provides a 16x savings in activation memory footprint. Most of the memory required to train our speech recognition models is used to store activations for back propagation, not to store network weights, so this savings directly increases the size of the model that we can train. We can now fit deep recurrent networks with 110 layers in GPU memory, which is about an order of magnitude deeper than our previous models with 7 layers.

3.2. Scaling data parallelism to more GPUs

Reducing the mini-batch per GPU also enables data parallel scaling to more GPUs without changing the algorithmic batch size. We commonly train networks with an algorithmic mini-batch size of 512 or 1024, because anything larger slows down model training. Using a mini-batch per GPU of 64 allows us to train a single model to 8 to 16 GPUs, but using a mini-batch size of 4 allows us to train the same model on 128 to 256 GPUs.

3.3. Future Work

The size of layers that can be trained using this approach will likely increase with future GPUs that include more hardware threads, more on-chip memory, and lower precision floating point operations. This work focuses on the simple RNNs used in the Deep Speech 2 networks, but this approach can also be applied to GRUs and LSTMs by distributing the weight matrices among GPU threads.

In principle it is also possible to apply this approach to other types of processors. For example, large GPUs from AMD or Intel can cache recurrent weights in thread register files. Many-core processors like Intel's Xeon and Xeon PHI can cache the recurrent weights in the L1 and L2 caches. FPGAs can distribute the weights in on-chip block RAMs.

We hope that others in the community will find Persistent RNNs to be useful tool for training even bigger and deeper recurrent neural networks on even bigger

datasets than could be done without them.